The Bayesian approach is a beautiful means of statistical inference in general, and phylogenetic inference in particular.

In addition to getting posterior distributions on trees and mutation model parameters, the Bayesian approach has been used to get posteriors on complex model parameters such as geographic location and ancestral population sizes of viruses, among other applications.

The Bayesian approach is a beautiful means of statistical inference in general, and phylogenetic inference in particular.

In addition to getting posterior distributions on trees and mutation model parameters, the Bayesian approach has been used to get posteriors on complex model parameters such as geographic location and ancestral population sizes of viruses, among other applications.

The bummer about Bayesian computation? It takes so darn long for those chains to converge. And what’s worse in this age of in-situ sequencing of viruses for rapidly unfolding epidemics? If you get a new sequence you have to start over from scratch.

I’ve been thinking about this for several years with Aaron Darling, and in particular about Sequential Monte Carlo (SMC) for this application. SMC, also called particle filtering, is a way to get a posterior estimate with a collection of “particles” in a sequential process of reproduction and selection. You can think about it as a probabilistically correct genetic algorithm– one that is guaranteed to sample the correct posterior distribution given an infinite number of particles.

Although SMC has been applied before in phylogenetics, it has not been used in an “online” setting to update posterior distributions as new sequences appear. Aaron and I worked up a proof-of-concept implementation of SMC with Connor McCoy, but when Connor left for Google the implementation lost some steam.

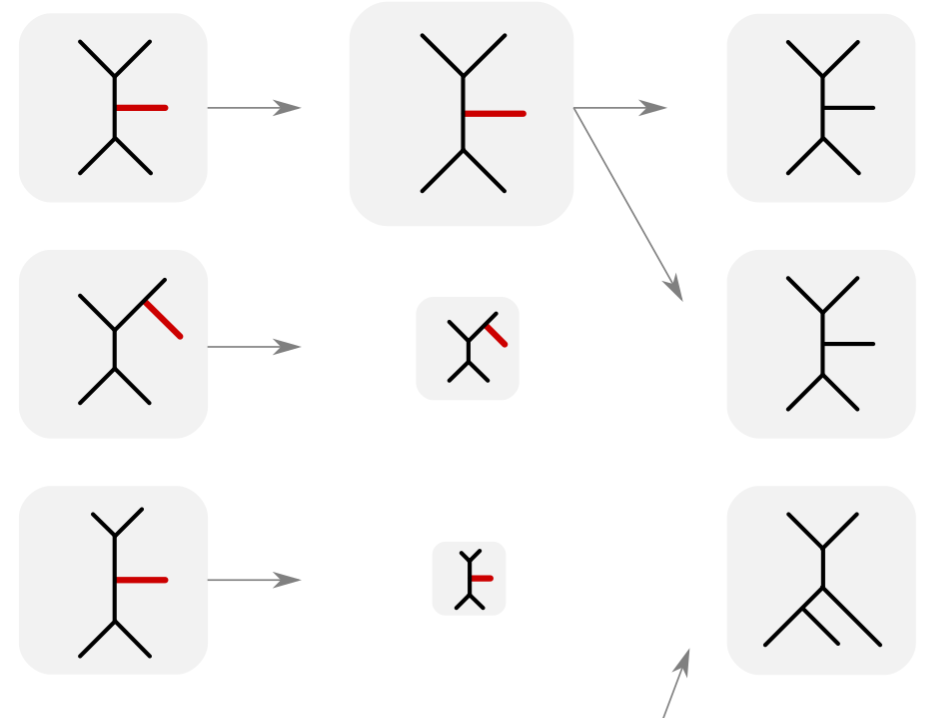

However, when Vu arrived I was still curious about the theory behind our little SMC implementation. Such SMC algorithms raise some really interesting mathematical questions concerning how the phylogenetic likelihood surface changes as new sequences are added to the data set. If it changes radically, then the prospects for SMC are dim. We call this the subtree optimality question: do high-likelihood trees on n taxa arise from attaching branches to high-likelihood trees on n-1 taxa? Years ago I considered a similar question with Angie Cueto but that was for the distance-based objective function behind neighbor-joining, and others have thought about it from the empirical side under the guise of taxon sampling.

As described in an arXiv preprint, Vu developed a theory with some real surprises! First, an induction-y proof leads to consistency: as the number of particles goes to infinity, we maintain a correct posterior at every stage. Then he directly took on the subtree optimality problem by writing out the relevant likelihoods and using bounds. (We think that this is the first theoretical result for likelihood on this question.)

Then the big win: using these bounds on the ratio of likelihoods for the parent and child particles, he was able to show that the effective sample size (ESS) of the sampler is bounded below by a constant multiple of the number of particles. This is pretty neat for two reasons: first, it’s good to know that we are getting a better posterior estimate as we increase our computational effort, and it’s nice that the ESS goes up linearly with this effort. Second, this constant doesn’t depend on the size of the tree, so this bodes well for building big trees by incrementally adding taxa.

Of course, with this sort of theory we can’t get a reasonable estimate on the size of this key constant, and indeed it could be uselessly small. However, I’m still encouraged by these results, and the paper points to some interesting directions. For example, because SMC is continually maintaining an estimate of the posterior distribution, one can mix in MCMC moves in ways that otherwise would violate detailed balance, such as using an MCMC transition kernel that focuses effort around the newly added edge. In this way we might use an “SMC” algorithm with relatively few particles which in practice resembles a principled highly parallel MCMC. On the other hand we might use clever tricks to scale the SMC component up to zillions of particles.

All this strengthens my enthusiasm for continuing this work. Luckily, Aaron has recruited Mathieu Fourment to work on getting a useful implementation, and every day we are getting good news about his improvements. So stay tuned!